Streaming Aggregation

In this module, you’ll create an Amazon Kinesis Data Analytics application to aggregate sensor data from the unicorn fleet in real time. The application will read from the Amazon Kinesis stream, calculate the total distance traveled and the minimum and maximum health and magic points for each unicorn currently on a Wild Ryde, and output these aggregated statistics to an Amazon Kinesis stream every minute. In the first section, you’ll run the application from a Flink Studio notebook. In the second, optional section, you’ll learn how to deploy the application to run outside the notebook.

Overview

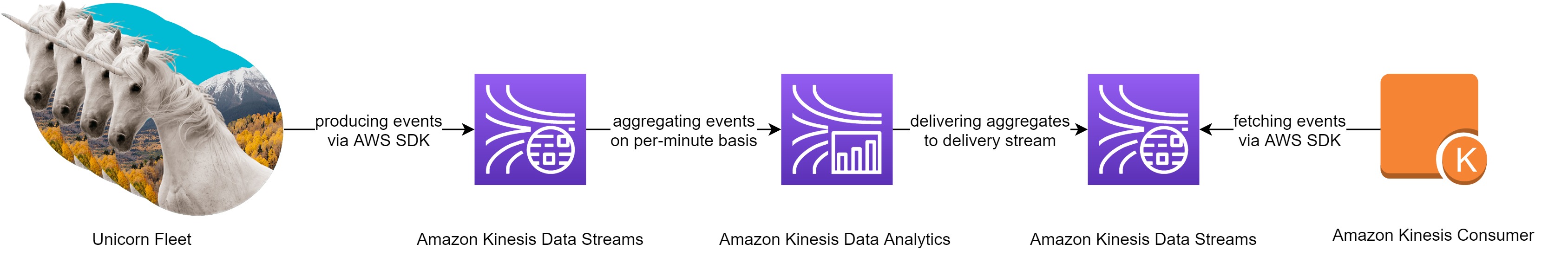

The architecture for this module involves an Amazon Kinesis Data Analytics application, source and destination Amazon Kinesis streams, and the producer and consumer command-line clients.

The Amazon Kinesis Data Analytics application processes data from the source Amazon Kinesis stream that we created in the previous module and aggregates it on a per-minute basis. Each minute, the application will emit, for each unicorn in our fleet, the total distance traveled in the last minute as well as the minimum and maximum health and magic point readings. These data points will be sent to a destination Amazon Kinesis stream for processing by other components in our system.
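To make the per-minute aggregation concrete, here is a minimal sketch in plain Python of the same grouping logic: readings are bucketed into one-minute windows per unicorn, and each bucket tracks total distance plus minimum and maximum health and magic points. It is not the Flink application built in this module. The field names (name, timestamp, distance, health, magic) and the use of epoch seconds are assumptions made for illustration, and the sketch ignores late or out-of-order data, which a real streaming engine has to handle.

```python
from collections import defaultdict
from dataclasses import dataclass

WINDOW_SECONDS = 60  # one-minute windows, matching the per-minute output


@dataclass
class Summary:
    """Running aggregate for one unicorn within one window."""
    distance: float = 0.0
    min_health: float = float("inf")
    max_health: float = float("-inf")
    min_magic: float = float("inf")
    max_magic: float = float("-inf")

    def add(self, reading):
        # Field names here are hypothetical, not the workshop's schema.
        self.distance += reading["distance"]
        self.min_health = min(self.min_health, reading["health"])
        self.max_health = max(self.max_health, reading["health"])
        self.min_magic = min(self.min_magic, reading["magic"])
        self.max_magic = max(self.max_magic, reading["magic"])


def aggregate(readings):
    """Group readings by (window start, unicorn name) and summarize each group."""
    windows = defaultdict(Summary)
    for reading in readings:
        # Round the timestamp down to the start of its one-minute window.
        window_start = reading["timestamp"] - reading["timestamp"] % WINDOW_SECONDS
        windows[(window_start, reading["name"])].add(reading)
    return dict(windows)
```

Calling aggregate on a list of readings returns a dictionary keyed by (window start, unicorn name), so two readings from the same unicorn at seconds 0 and 30 land in the same summary, while a reading at second 61 starts a new one.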

During the workshop, we will use the consumer.go application to consume the resulting stream. To do so, the application uses the AWS SDK and acts as a Kinesis consumer.

Next

✅ Please proceed to Implement the Streaming Application to learn how to develop a Flink analytics application using the Studio notebooks.

✅ Depending on your time or interest, you can skip this module and proceed to Data Lake, where you’ll send the raw stream data from the Kinesis stream to Kinesis Data Firehose for delivery to Amazon S3 and use Amazon Athena to query that raw data.